Research

Research Agenda

Our research follows two general streams:

- Developing new Bayesian methods that build on the emerging generation of generative AI.

- Crafting computational models of complex systems to gain insights from (big) data.

These two streams converge in the BayesFlow framework for Bayesian inference with modern deep learning. The list below provides a small but representative sample of our recent research outcomes.

Recent Papers

2026

BayesFlow 2.0: Multi-Backend Amortized Bayesian Inference in Python

arXiv · 10 Feb 2026 · arXiv:2602.07098

We present the new BayesFlow 2.0 framework for general-purpose amortized Bayesian inference. Along with direct posterior, likelihood, and ratio estimation, the software includes support for multiple popular deep learning backends, a rich collection of generative networks for sampling and density estimation, complete customization and high-level interfaces, as well as new capabilities for hyperparameter optimization, design optimization, and hierarchical modeling.

Diffusion Models in Simulation-Based Inference: A Tutorial Review

arXiv · 30 Jan 2026 · arXiv:2512.20685

In this tutorial review, we synthesize recent developments on diffusion models in SBI, covering design choices for training, inference, and evaluation. We highlight opportunities created by various concepts such as guidance, score composition, flow matching, consistency models, and joint modeling. We also discuss how efficiency and statistical accuracy are affected by noise schedules, parameterizations, and samplers. Concepts are illustrated with case studies across various problems.

2025

JADAI: Jointly Amortizing Adaptive Design and Bayesian Inference

arXiv · 30 Dec 2025 · arXiv:2512.22999

We introduce JADAI, a unified amortized framework for sequential experiment design and Bayesian inference that learns end-to-end how to choose experimental settings that shape the observation process and how to update the posterior uncertainty over the parameters as data accumulates. JADAI trains a policy, a history encoder, and a posterior estimator jointly and achieves superior or competitive performance on adaptive design benchmarks.

Modeling multi-agent motion dynamics in immersive rooms

arXiv · 22 Dec 2025 · arXiv:2511.08763

Computational models can help us understand the underlying mechanisms of human interaction in spatially augmented reality systems. In a first application of simulation-based inference (SBI) to human-building interaction, we develop a new agent-based model of emergent human motion in immersive rooms, with agents simultaneously influenced by their surrounding virtual world and their local neighbors. Using SBI, we show that the governing parameters of motion patterns can be estimated from simple observables. Our approach paves the way for creating adaptive interfaces for intelligent built environments.

Compositional amortized inference for large-scale hierarchical Bayesian models

arXiv · 02 Oct 2025 · arXiv:2505.14429

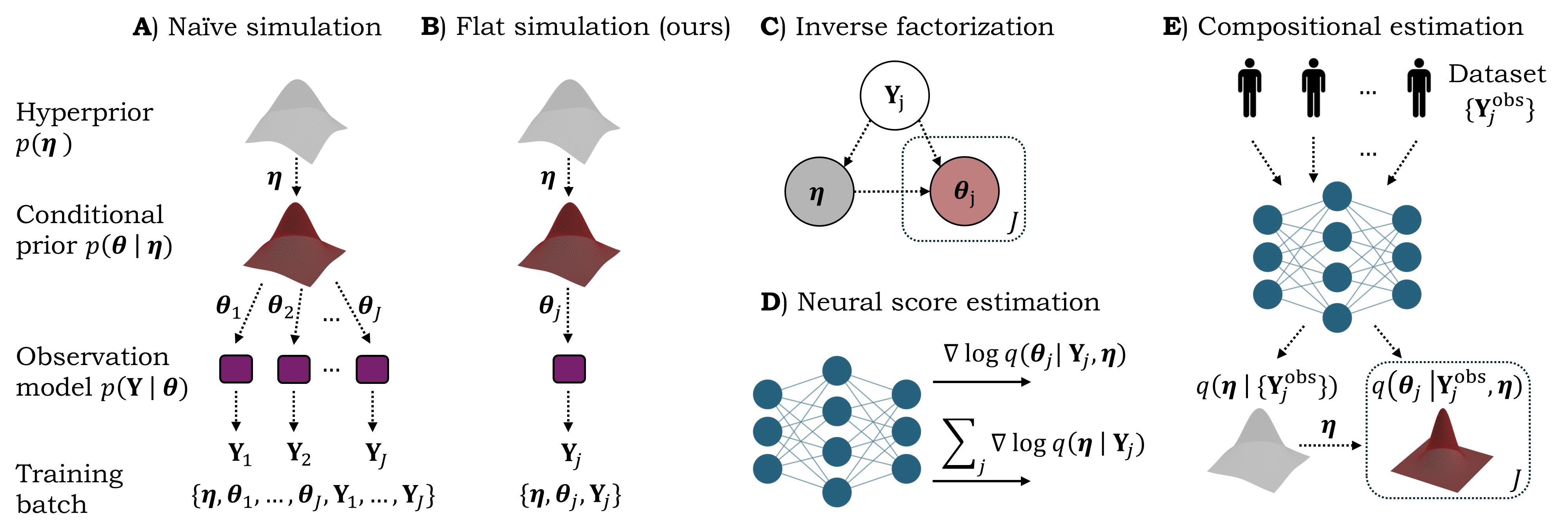

Hierarchical Bayesian modeling is the default approach in Bayesian analysis. However, existing Bayesian methods struggle to fit hierarchical models with many parameters on massive data sets. To address this issue, we develop a divide-and-conquer method based on score-based diffusion models. Our method scales to hundreds of thousands of parameters and enables fully Bayesian fluorescence lifetime imaging (FLI) under low signal-to-noise conditions.
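The divide-and-conquer idea rests on a standard identity: if groups of data y_1, ..., y_n are conditionally independent given shared parameters θ, then ∇ log p(θ | y_1, ..., y_n) = Σ_i ∇ log p(θ | y_i) − (n − 1) ∇ log p(θ). The sketch below checks this numerically on a hypothetical conjugate Gaussian toy model with analytic scores. It illustrates the principle only: in the actual method the per-group scores would be learned by a diffusion model, and at nonzero diffusion times the composition generally holds only approximately.

```python
import numpy as np

# Hypothetical toy model (an assumption for illustration, not from the paper):
# shared theta ~ N(0, PRIOR_SD^2), and n groups with y_i | theta ~ N(theta, NOISE_SD^2),
# conditionally independent given theta.
PRIOR_SD = 2.0
NOISE_SD = 0.5


def prior_score(theta):
    """Gradient of log p(theta) for the Gaussian prior."""
    return -theta / PRIOR_SD**2


def local_posterior_score(theta, y_i):
    """Gradient of log p(theta | y_i): prior score plus a single likelihood term."""
    return prior_score(theta) + (y_i - theta) / NOISE_SD**2


def full_posterior_score(theta, y):
    """Gradient of log p(theta | y_1, ..., y_n), computed directly."""
    return prior_score(theta) + np.sum(y - theta) / NOISE_SD**2


def compositional_score(theta, y):
    """Sum of per-group posterior scores, minus the (n - 1) surplus prior scores."""
    n = len(y)
    local = sum(local_posterior_score(theta, y_i) for y_i in y)
    return local - (n - 1) * prior_score(theta)


rng = np.random.default_rng(0)
y = rng.normal(1.0, NOISE_SD, size=50)
for theta in (-1.0, 0.3, 2.5):
    print(theta, full_posterior_score(theta, y), compositional_score(theta, y))
```

Because each group only ever needs its own local score, the groups can be processed independently and combined afterwards, which is what makes this kind of composition attractive at large scale.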

Simulations in Statistical Workflows

arXiv · 27 Aug 2025 · arXiv:2503.24011

Simulations play important and diverse roles in statistical workflows, for example, in model specification, checking, validation, and inference. Over the past decades, the application areas and overall potential of simulations in statistical workflows have expanded significantly, driven by the development of new AI-assisted methods and exponentially increasing computational resources. In this paper, we examine past and current trends in the field and offer perspectives on how simulations may shape the future of statistical practice.

Does Unsupervised Domain Adaptation Improve the Robustness of Amortized Bayesian Inference? A Systematic Evaluation

arXiv · 21 May 2025 · arXiv:2502.04949

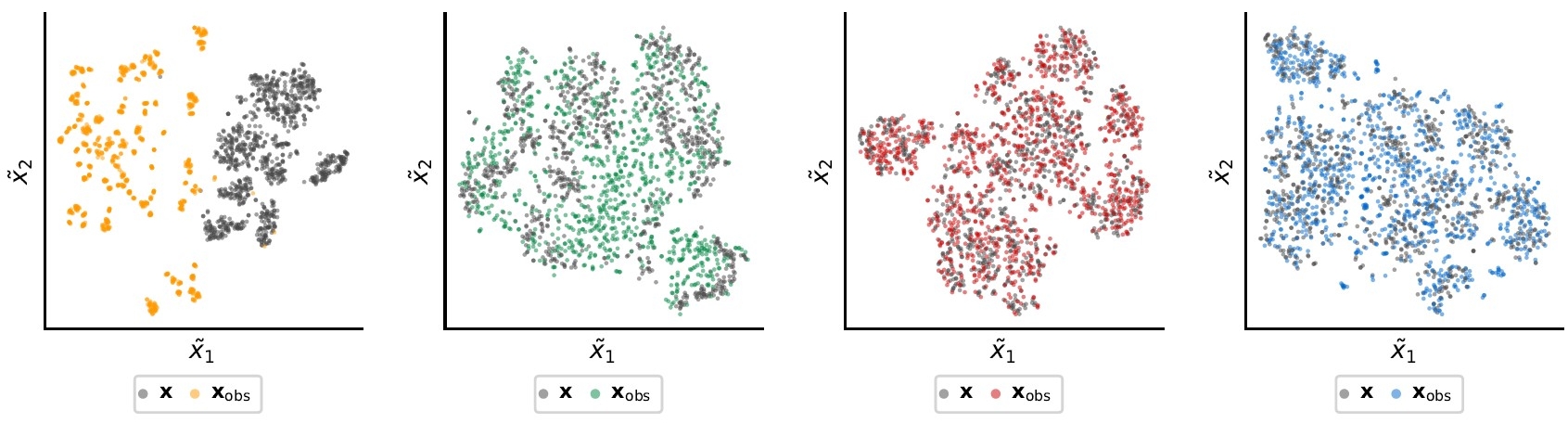

Neural networks are fragile when confronted with data that deviate significantly from their training distribution. This is particularly true for models trained on simulated data and deployed for inference on real-world observations. We formalize the targets of trustworthy inference and systematically test unsupervised domain adaptation (UDA) methods that align embeddings of simulated and real data. We find that UDA reduces noise-related errors, but can cause unexpected failures when the model’s prior assumptions are wrong.

Scaling Cognitive Modeling to Big Data: A Deep Learning Approach to Studying Individual Differences in Evidence Accumulation Model Parameters

Center for Open Science · 24 Jan 2025 · 10.31219/osf.io/ge83u

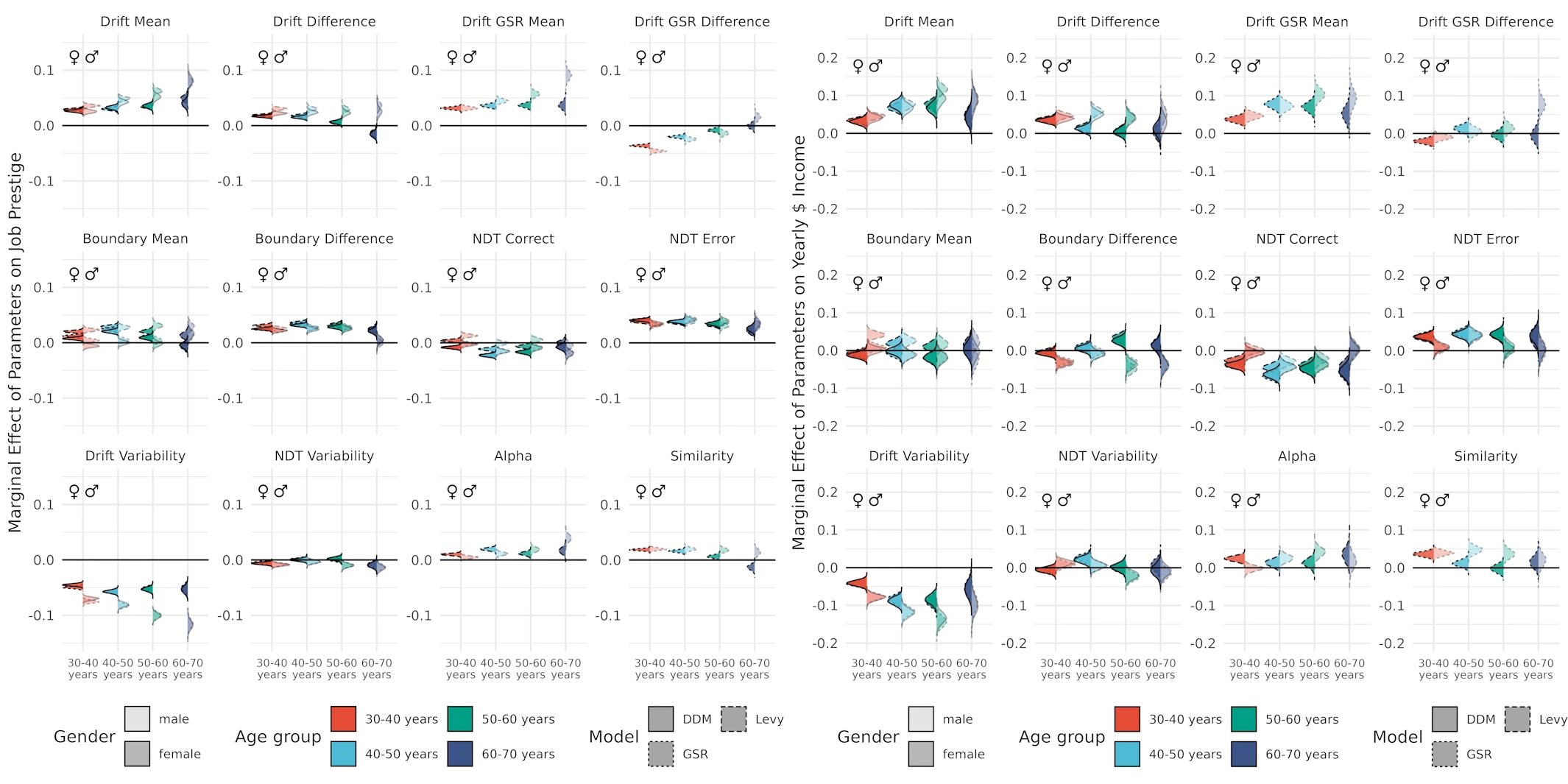

Cognitive models can estimate latent parameters from reaction time and accuracy data, capturing aspects like processing speed and decision caution. We examined how these parameters relate to real-world outcomes—education, income, and job prestige—in a large online sample. While overall effects were small, variability in processing speed (drift rate) was the strongest and most consistent predictor, highlighting the value of behavioral data science for generating new hypotheses about overlooked measures in cognitive modeling.

2024

Consistency Models for Scalable and Fast Simulation-Based Inference

arXiv · 05 Nov 2024 · arXiv:2312.05440

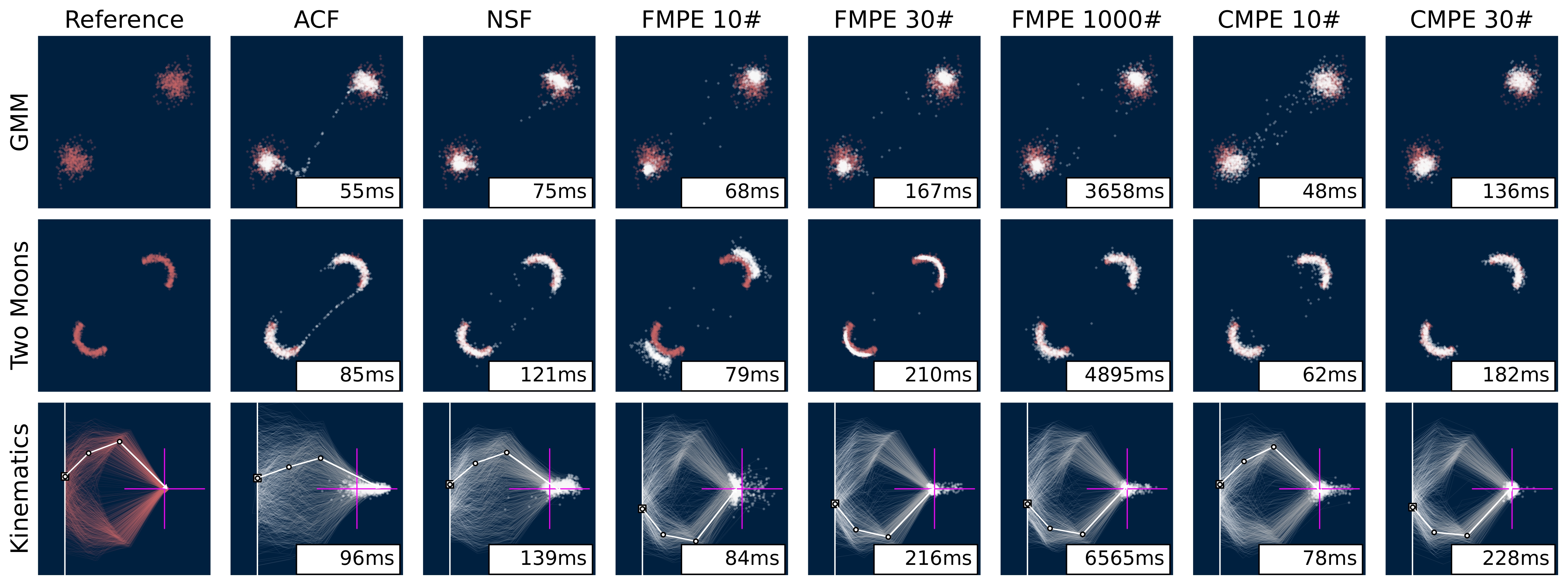

We present consistency models for posterior estimation (CMPE), a new free-form generative model family grounded in diffusion model theory. CMPE enables rapid few-shot inference with an unconstrained architecture that can be flexibly tailored to the model’s symmetry. We provide hyperparameters that support consistency training across a wide range of dimensionalities, including the low-dimensional settings that are important in simulation-based inference but were previously difficult to tackle.

Validation and Comparison of Non-stationary Cognitive Models: A Diffusion Model Application

Computational Brain & Behavior · 08 Oct 2024 · 10.1007/s42113-024-00218-4

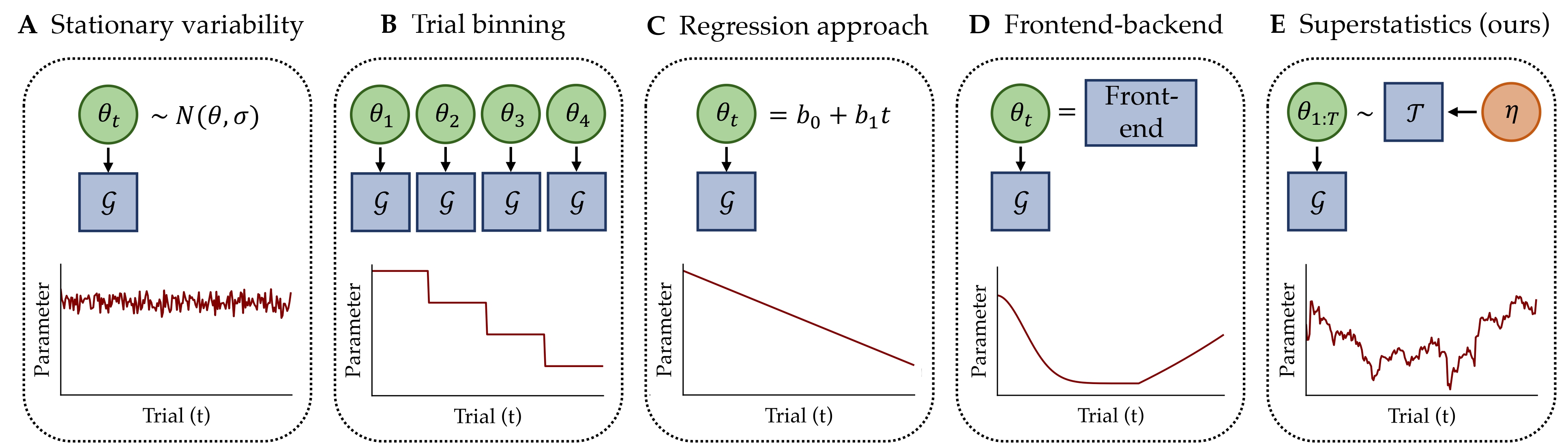

Cognitive processes naturally fluctuate over time, and superstatistics offer a flexible way to capture these non-stationary dynamics in cognitive models. We provide the first experimental validation of this approach by comparing four time-varying diffusion decision models in a perceptual task with controlled difficulty and speed-accuracy conditions. Using deep learning for efficient Bayesian estimation, we find that models allowing both gradual and abrupt parameter changes best match the data, suggesting that the inferred dynamics reflect genuine shifts in cognitive states.
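To make the idea of time-varying parameters concrete, here is a minimal simulator sketch, assuming a standard two-boundary diffusion decision model with unit noise and a drift rate that changes from trial to trial. Small Gaussian random-walk steps stand in for gradual change and occasional redraws stand in for abrupt change. The function names, parameter values, and transition rule are illustrative assumptions, not the four models compared in the paper.

```python
import numpy as np


def simulate_trial(drift, threshold, ndt, rng, dt=1e-3, max_time=5.0):
    """Simulate one diffusion decision trial with the Euler-Maruyama scheme.

    Evidence starts midway between 0 and `threshold` and moves with rate
    `drift` plus unit-variance Gaussian noise. Returns (reaction time, choice),
    where choice is 1 for the upper boundary and 0 otherwise.
    """
    x, t = threshold / 2.0, 0.0
    while 0.0 < x < threshold and t < max_time:
        x += drift * dt + np.sqrt(dt) * rng.standard_normal()
        t += dt
    return ndt + t, int(x >= threshold)


def simulate_nonstationary(n_trials, rng, drift0=1.0, step_sd=0.05,
                           jump_prob=0.02, jump_sd=1.0,
                           threshold=1.5, ndt=0.3):
    """Simulate trials whose drift rate evolves over time (hypothetical transition rule).

    Gradual change: each trial adds a small Gaussian step to the drift.
    Abrupt change: with probability `jump_prob`, the drift is instead
    redrawn from N(drift0, jump_sd^2), mimicking a sudden shift in state.
    """
    drifts = np.empty(n_trials)
    data = np.empty((n_trials, 2))  # columns: reaction time, choice
    drift = drift0
    for k in range(n_trials):
        if rng.random() < jump_prob:
            drift = rng.normal(drift0, jump_sd)
        else:
            drift = drift + step_sd * rng.standard_normal()
        drifts[k] = drift
        data[k] = simulate_trial(drift, threshold, ndt, rng)
    return drifts, data


rng = np.random.default_rng(1)
drifts, data = simulate_nonstationary(200, rng)
print(drifts[:5])
print(data[:5])
```

Fitting such a model means inferring the whole drift trajectory together with the static parameters, which is where simulation-based deep learning estimators become useful.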

Sensitivity-Aware Amortized Bayesian Inference

arXiv · 29 Aug 2024 · arXiv:2310.11122

Sensitivity analyses can reveal how different modeling choices affect statistical results, but they’re often too slow for complex Bayesian models. We introduce sensitivity-aware amortized Bayesian inference (SA-ABI) as an efficient method that (1) uses weight sharing to capture similarities between model versions with little extra cost, (2) exploits the speed of neural networks to test sensitivity to data and preprocessing changes, and (3) applies deep ensembles to spot sensitivity caused by poor fit. Our experiments show that SA-ABI can provide automatic insights into hidden uncertainties.

Leveraging Self-Consistency for Data-Efficient Amortized Bayesian Inference

arXiv · 24 Jul 2024 · arXiv:2310.04395

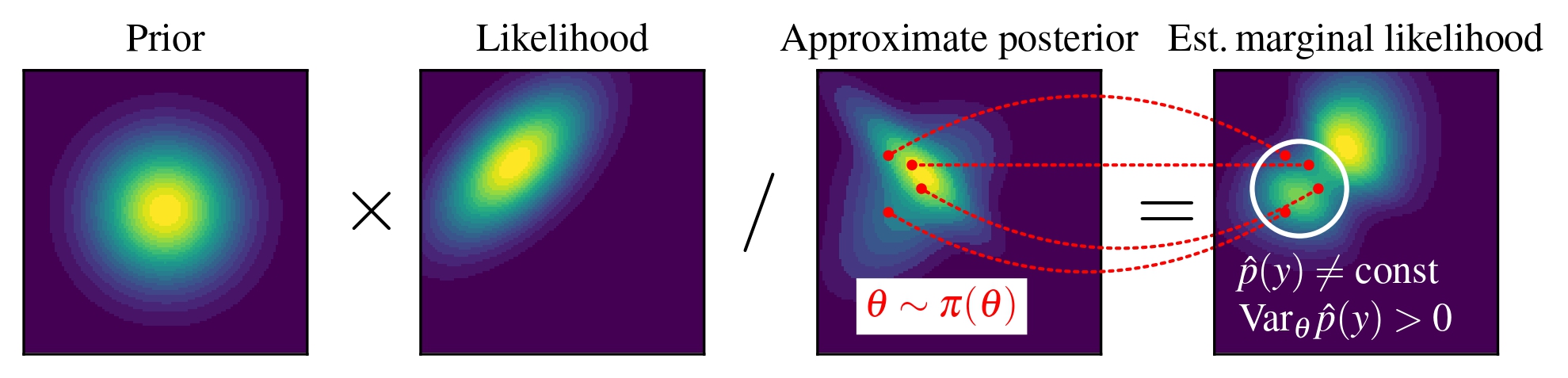

We improve amortized Bayesian inference by inverting Bayes’ theorem to estimate the implicit model evidence. We leverage the observation that deviations from constant evidence reveal approximation errors, which we penalize through a novel self-consistency loss. This loss improves inference quality in low-data regimes and boosts the training of neural density estimators across synthetic and real-world models.
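The self-consistency idea follows from rearranging Bayes' theorem: log p(y) = log p(θ) + log p(y | θ) − log q(θ | y), which yields the same value for every θ when q is the true posterior. A minimal sketch, assuming a hypothetical conjugate Gaussian model and using the variance of these per-θ evidence estimates as the penalty (the paper's exact loss may be defined differently):

```python
import numpy as np


def log_normal_pdf(x, mean, sd):
    """Log density of N(mean, sd^2) evaluated at x."""
    return -0.5 * np.log(2 * np.pi * sd**2) - 0.5 * ((x - mean) / sd) ** 2


def self_consistency_loss(thetas, y, q_mean, q_sd, noise_sd=0.5):
    """Variance of log-evidence estimates across parameter draws.

    Assumed toy model: theta ~ N(0, 1), y | theta ~ N(theta, noise_sd^2),
    with a Gaussian approximate posterior q(theta | y) = N(q_mean, q_sd^2).
    The identity holds for any theta, so for the exact posterior the
    estimates agree and the variance is zero; errors make them disagree.
    """
    log_evidence = (
        log_normal_pdf(thetas, 0.0, 1.0)         # log prior
        + log_normal_pdf(y, thetas, noise_sd)    # log likelihood
        - log_normal_pdf(thetas, q_mean, q_sd)   # log approximate posterior
    )
    return np.var(log_evidence)


y, noise_sd = 0.8, 0.5
post_var = 1.0 / (1.0 + 1.0 / noise_sd**2)  # conjugate posterior variance
post_mean = post_var * y / noise_sd**2       # conjugate posterior mean
post_sd = np.sqrt(post_var)

rng = np.random.default_rng(0)
thetas = rng.normal(post_mean, post_sd, size=1000)

exact = self_consistency_loss(thetas, y, post_mean, post_sd, noise_sd)
wrong = self_consistency_loss(thetas, y, post_mean + 0.3, post_sd * 1.5, noise_sd)
print(exact, wrong)
```

Since the penalty needs only the prior and likelihood densities, not extra simulations, it can supplement training when simulation budgets are small.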

Detecting Model Misspecification in Amortized Bayesian Inference with Neural Networks: An Extended Investigation

arXiv · 07 Jun 2024 · arXiv:2406.03154

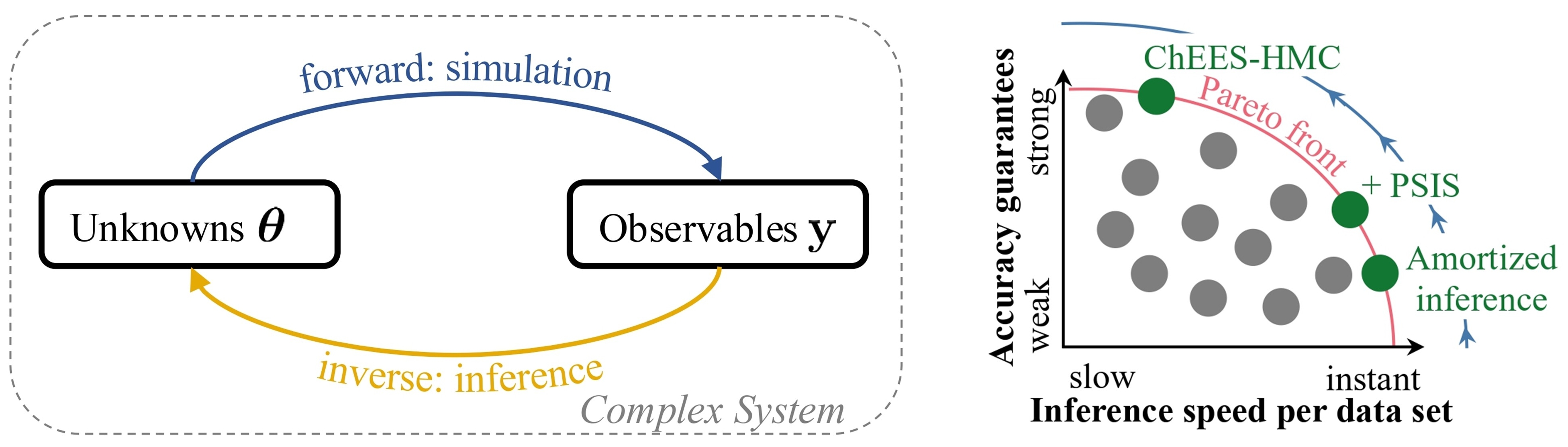

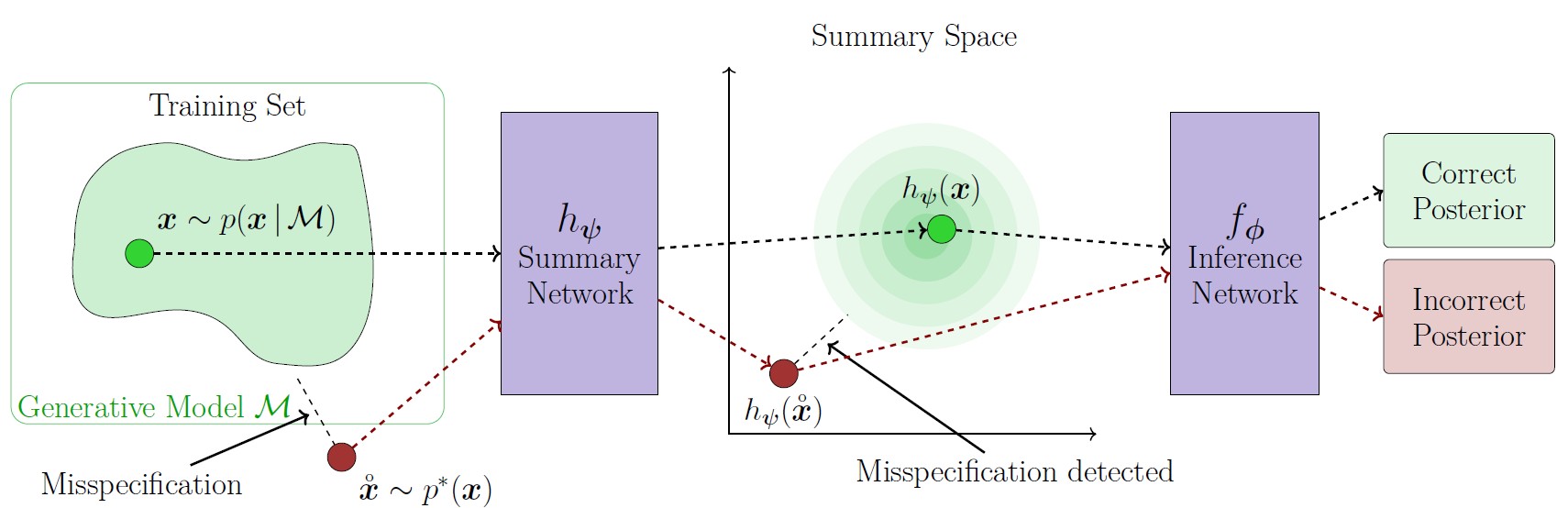

Generative AI enables efficient simulation-based inference (SBI) for complex models. But how faithful is such inference if the simulation represents reality somewhat inaccurately? We conceptualize the types of simulation gaps arising in SBI and investigate how neural network outputs gradually deteriorate as a consequence. To notify users about this problem, we propose a new measure that can be trained in an unsupervised way. We show that our measure warns users about suspicious outputs, raises an alarm when predictions are not trustworthy, and guides model designers in their search for better simulators.
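One widely used way to quantify a simulation gap is the maximum mean discrepancy (MMD) between embeddings of simulated and observed data. The sketch below computes a biased MMD estimate with a Gaussian kernel. The random vectors stand in for learned summary statistics, and the kernel, bandwidth, and sample sizes are illustrative assumptions rather than the exact measure proposed in the paper.

```python
import numpy as np


def gaussian_kernel(a, b, bandwidth):
    """Pairwise Gaussian (RBF) kernel matrix between the rows of a and b."""
    sq_dists = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    return np.exp(-sq_dists / (2.0 * bandwidth**2))


def mmd_squared(x, y, bandwidth=1.0):
    """Biased (V-statistic) estimate of the squared MMD between samples x and y."""
    k_xx = gaussian_kernel(x, x, bandwidth).mean()
    k_yy = gaussian_kernel(y, y, bandwidth).mean()
    k_xy = gaussian_kernel(x, y, bandwidth).mean()
    return k_xx + k_yy - 2.0 * k_xy


rng = np.random.default_rng(0)
# Hypothetical 4-dimensional stand-ins for summary-network embeddings.
sim = rng.normal(0.0, 1.0, size=(500, 4))          # simulated training data
obs_ok = rng.normal(0.0, 1.0, size=(100, 4))       # observations the simulator matches
obs_shifted = rng.normal(1.0, 1.0, size=(100, 4))  # observations with a simulation gap
print(mmd_squared(sim, obs_ok), mmd_squared(sim, obs_shifted))
```

A larger value signals that observed embeddings fall outside the region seen during training; in practice, a threshold could be calibrated on held-out simulations.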

A deep learning method for comparing Bayesian hierarchical models

Psychological Methods · 06 May 2024 · 10.1037/met0000645

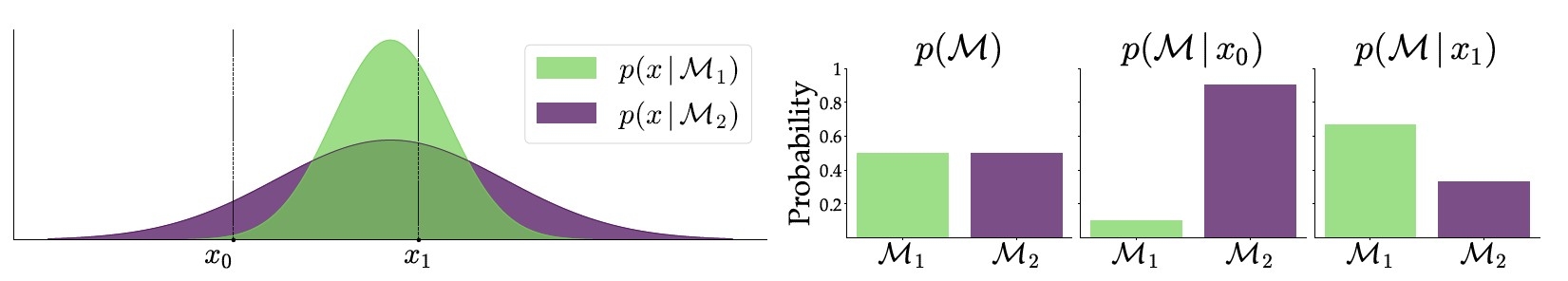

Bayesian model comparison (BMC) is a formal way to assess the relative merits of competing computational models. However, BMC is often intractable for hierarchical models due to their large number of parameters. To address this issue, we propose a deep learning method for performing BMC on any set of hierarchical models that can be instantiated as probabilistic programs. In addition to unlocking the ability to compare previously intractable models, our method enables re-estimation of model probabilities and fast performance validation prior to any real-data application.
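One common approach to amortized BMC trains a classifier on simulations from each candidate model so that its softmax output approximates posterior model probabilities p(M | y). The sketch below assumes such a trained network already exists (its logits are made up) and shows how Bayes factors follow from posterior and prior model odds. It does not reproduce the paper's hierarchical network architecture.

```python
import numpy as np


def posterior_model_probs(logits):
    """Convert classifier logits into posterior model probabilities via softmax."""
    z = logits - np.max(logits)  # subtract the max for numerical stability
    p = np.exp(z)
    return p / p.sum()


def bayes_factor(probs, prior_probs, i, j):
    """Bayes factor BF_ij: posterior odds of model i vs. j divided by their prior odds."""
    return (probs[i] / probs[j]) / (prior_probs[i] / prior_probs[j])


# Hypothetical network output for one data set and three candidate models.
logits = np.array([2.0, 0.5, -1.0])
prior = np.full(3, 1.0 / 3.0)  # equal prior model probabilities (assumed)
probs = posterior_model_probs(logits)
print(probs, bayes_factor(probs, prior, 0, 1))
```

With equal prior probabilities, the Bayes factor reduces to the ratio of posterior model probabilities, here exp(2.0 − 0.5) = exp(1.5).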